Agents are for loops and some ifs

Don’t use agent libraries. Abstractions can be powerful, but when dealing with emerging technologies they are definitely overkill and can even burden your projects.

That is especially true for generative AI and even more so for agents.

I believe there is an ever-growing trend of wrapping simple things in complexity to obscure the fact that what we do is pretty basic. We call that abstraction, but the word should be reserved for things that are genuinely complex, where we build layers precisely to deal with that complexity.

To be fair, there is no consensus on what agents are, and AI’s greatest minds use loopholes to avoid defining them, calling the behaviours “agentic” instead.

One reason for machine learning’s success is that our field welcomes a wide range of work. I can’t think of even one example where someone developed what they called a machine learning algorithm and senior members of our community criticized it saying, “that’s not machine…

— Andrew Ng (@AndrewYNg) June 13, 2024

In the end, we have agent libraries, SDKs and orchestration frameworks that all more or less do the same thing: betting on the tool/function calling capabilities of LLMs, hoping that the right tool will be called, and then trying to mitigate how often it fails with retries or by asking the LLM which tools it should call (adding a costly round trip to the equation).

The uncertainty of it

Even LLM providers are not sure how to deal with agents. As is often the case with emerging technology, we look for ways around the limitations of the new medium. Tool and function calling, one of the core mechanisms driving agentic behaviours, is not a solved problem. Looking at Anthropic’s own documentation, the uncertainty is total: for a weather API call, they are not even sure the LLM is smart enough to understand that it needs to call two tools at once to answer the question. And the way they phrase it suggests that most of the time it can’t:

In this case, Claude will most likely try to use two separate tools, one at a time (`get_weather` and then `get_time`), in order to fully answer the user’s question. However, it will also occasionally output two `tool_use` blocks at once, particularly if they are not dependent on each other. You would need to execute each tool and return their results in separate `tool_result` blocks within a single `user` message. (Anthropic, multiple tool example)
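Executing several tools from one response is not the hard part; getting the model to emit them together is. A minimal sketch of the bookkeeping, assuming the response content arrives as plain dicts shaped like Anthropic’s documented `tool_use` / `tool_result` blocks (no SDK call is made here); `get_weather`, `get_time`, the tool IDs and their outputs are hypothetical stubs:

```python
from typing import Any, Callable


# Hypothetical local tools, stubbed for illustration only.
def get_weather(location: str) -> str:
    return f"Sunny in {location}"


def get_time(timezone: str) -> str:
    return f"12:00 in {timezone}"


TOOLS: dict[str, Callable[..., str]] = {"get_weather": get_weather, "get_time": get_time}


def build_tool_results(assistant_content: list[dict[str, Any]]) -> dict[str, Any]:
    """Run every tool_use block and return ALL results in a single user message."""
    results = []
    for block in assistant_content:
        if block.get("type") != "tool_use":
            continue  # ignore text blocks
        tool = TOOLS.get(block["name"])
        if tool is None:
            # Report unknown tools back to the model instead of crashing.
            results.append({"type": "tool_result", "tool_use_id": block["id"],
                            "content": f"Unknown tool: {block['name']}", "is_error": True})
        else:
            results.append({"type": "tool_result", "tool_use_id": block["id"],
                            "content": tool(**block["input"])})
    return {"role": "user", "content": results}


# Example: a response that (occasionally) contains two tool_use blocks at once.
assistant_content = [
    {"type": "text", "text": "Let me check both."},
    {"type": "tool_use", "id": "toolu_1", "name": "get_weather", "input": {"location": "Paris"}},
    {"type": "tool_use", "id": "toolu_2", "name": "get_time", "input": {"timezone": "Europe/Paris"}},
]
message = build_tool_results(assistant_content)
print(len(message["content"]))  # 2
print(message["content"][1]["content"])  # 12:00 in Europe/Paris
```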

This means you will lose money and time on several round trips to the LLM, adding crazy latency and potential throttling to every query, and the more tools you work with, the more complex it becomes.
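A rough way to see why this compounds: if the model emits at most k tool calls per response, a query needing n independent tool calls costs ceil(n / k) + 1 LLM calls, the +1 being the call that turns the results into an answer. A minimal sketch, assuming a flat, hypothetical cost per LLM call and ignoring tool runtime and throttling:

```python
import math


def llm_round_trips(n_tools: int, tools_per_turn: int) -> int:
    """LLM calls needed for a query that requires n_tools independent tool calls.

    Assumes the model emits at most `tools_per_turn` tool calls per response and
    that one final call is needed to turn the tool results into an answer.
    """
    if n_tools < 0 or tools_per_turn < 1:
        raise ValueError("need n_tools >= 0 and tools_per_turn >= 1")
    return math.ceil(n_tools / tools_per_turn) + 1


def estimated_latency(n_tools: int, tools_per_turn: int, seconds_per_call: float) -> float:
    """Hypothetical flat cost per LLM call; ignores tool runtime and throttling."""
    return llm_round_trips(n_tools, tools_per_turn) * seconds_per_call


print(llm_round_trips(2, 1))   # 3: get_weather, then get_time, then the answer
print(llm_round_trips(2, 2))   # 2: both tools at once, then the answer
print(llm_round_trips(10, 1))  # 11
```

With one tool per turn, cost grows linearly with the number of tools a query needs; only batching tool calls in a single response flattens it.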

Agents are Russian dolls of unnecessary abstractions

Coming back to the notion of agents... Agent and orchestration frameworks want you to believe that agents should be abstractions built on top of tool calling that let you segment your tools. For instance, you could use domain segmentation: a weather agent containing our two previous tools, and another agent doing something totally different, like a real estate agent. They can interact with each other for some edge cases, using some kind of orchestration layer to do so.

Diagram: agents segmented by domain, each managing its own tools while enabling cross-domain communication when needed.
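Stripped of the framework vocabulary, that kind of domain segmentation is just grouping tools. A minimal sketch, where the `Agent` dataclass, the prompts and `search_listings` are hypothetical names chosen for illustration:

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """A 'domain agent': a name, a system prompt and the subset of tools it may use."""
    name: str
    system_prompt: str
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)


# Hypothetical tools, stubbed for illustration only.
def get_weather(location: str) -> str:
    return f"Sunny in {location}"


def get_time(timezone: str) -> str:
    return f"12:00 in {timezone}"


def search_listings(city: str) -> str:
    return f"Listings in {city}"


weather_agent = Agent("weather", "You answer weather questions.",
                      {"get_weather": get_weather, "get_time": get_time})
real_estate_agent = Agent("real_estate", "You help people find properties.",
                          {"search_listings": search_listings})

# The "agent registry" is a dict.
AGENTS = {agent.name: agent for agent in (weather_agent, real_estate_agent)}

print(sorted(AGENTS["weather"].tools))  # ['get_time', 'get_weather']
```

Each agent would only send its own tools to the LLM, which is what keeps each individual call smaller.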

This is a nice way to fight the non-deterministic nature of LLMs by reducing the complexity of each agent, though unfortunately it also adds latency and extra LLM calls. But why would you need an orchestrator for that? And more importantly, what would this orchestrator do? Most of the time, not much beyond adding one round trip to an LLM to ask which tools to call, maybe with some retry logic and other minor things that would take 1-2 lines of code to implement.
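For a sense of scale, here is roughly what such an orchestrator boils down to: one LLM call to pick a domain, plus a retry when the answer is not usable. A minimal sketch, where `ask_llm` is a hypothetical stand-in for whatever client you use and the prompt wording is an assumption:

```python
from typing import Callable


def route(query: str, domains: list[str], ask_llm: Callable[[str], str],
          max_retries: int = 3) -> str:
    """Ask the LLM which domain handles the query; retry on invalid answers."""
    prompt = f"Answer with exactly one of {domains}. Which one handles: {query}"
    for _ in range(max_retries):
        answer = ask_llm(prompt).strip()
        if answer in domains:  # the "some ifs"
            return answer
    raise RuntimeError(f"No valid domain after {max_retries} attempts")


# Example with a fake LLM that gets it wrong once, then right.
replies = iter(["probably weather?", "weather"])
print(route("Is it raining in Paris?", ["weather", "real_estate"], lambda p: next(replies)))
# weather
```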

Discussing function calls / tool use accuracy

Most of the complexity we are building is there to fight the limitations of LLMs. If we could somehow embed all our tools in a single call and have the LLM reliably figure out which ones to call, that would be it, and we wouldn’t need any of those frameworks or libraries. But it turns out the reality of agents and tool use is pretty stark, and you wouldn’t know it from the AI influencers spamming X, LinkedIn, YouTube… The only thing that sucks more than that is RAG.

The state of multi-turn function calling and agents

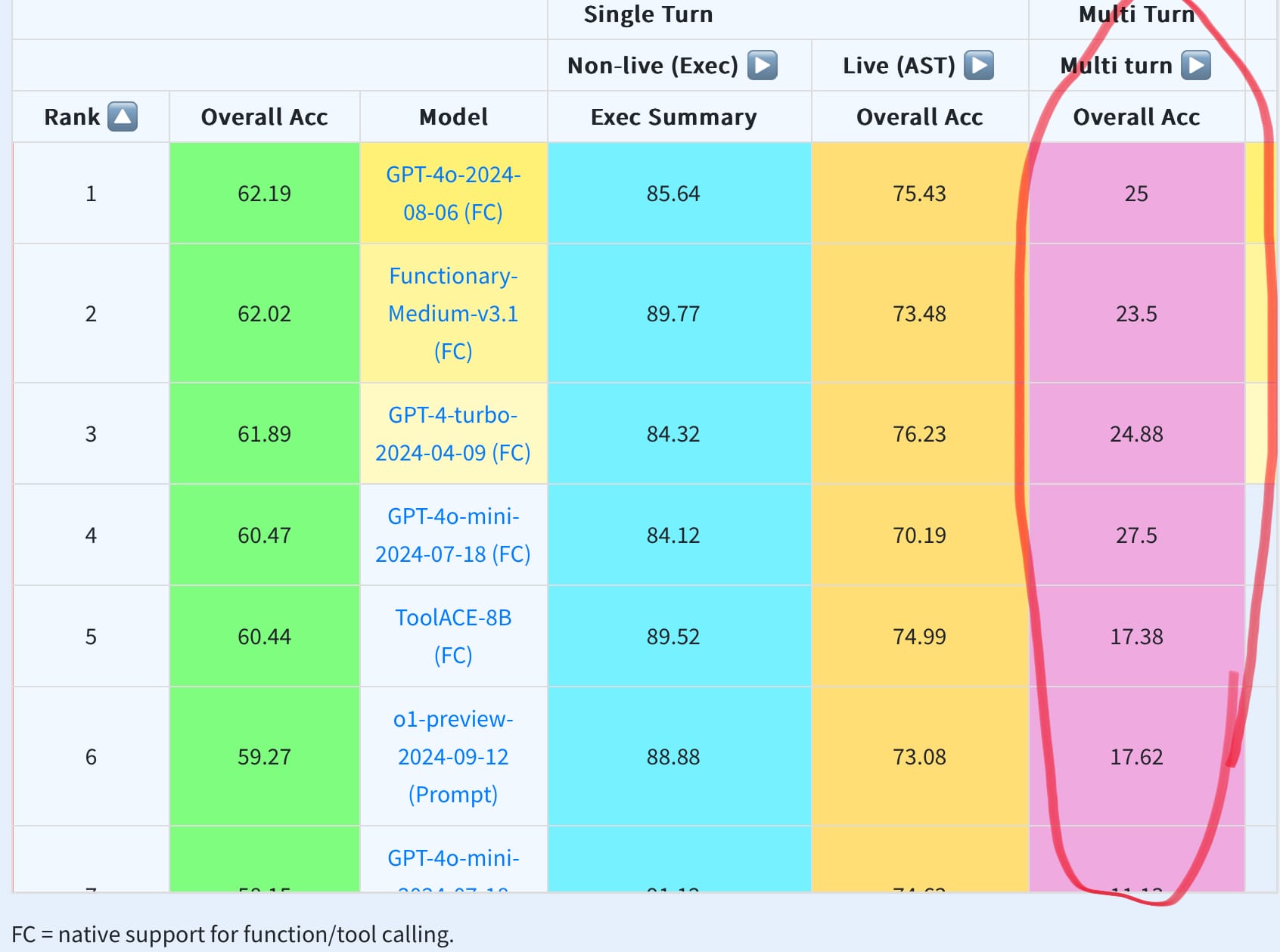

Let’s look at Function Calling Leaderboards to confirm it. Even here, the data is abstracted behind an average. But when looking at agentic behaviours, the defining criterion is the ability not to limit the agent to one behaviour/call per turn. Complex queries may require many tools, and if every call means another round trip, your application won’t scale and will forever remain an MVP you showcase in your next promo doc. And we can see that in multi-turn, even the best models do not score above 25…

In the end, we use abstractions to hide the fact that this stuff does not work most of the time, and that agents are just a for loop with some ifs...
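Taken literally, the for loop with some ifs looks something like this. A minimal sketch, assuming `call_llm` is a hypothetical wrapper around your LLM client that returns a dict with Messages-API-style `content` blocks; the fake model in the example is scripted for illustration:

```python
from typing import Any, Callable


def run_agent(
    messages: list[dict[str, Any]],
    call_llm: Callable[[list[dict[str, Any]]], dict[str, Any]],
    tools: dict[str, Callable[..., str]],
    max_steps: int = 10,
) -> str:
    for _ in range(max_steps):  # the for loop
        reply = call_llm(messages)
        messages.append({"role": "assistant", "content": reply["content"]})
        tool_calls = [b for b in reply["content"] if b["type"] == "tool_use"]
        if not tool_calls:  # if: no tool requested, return the text answer
            return "".join(b.get("text", "") for b in reply["content"] if b["type"] == "text")
        results = []
        for call in tool_calls:
            if call["name"] in tools:  # if: the model asked for a tool we have
                output, is_error = tools[call["name"]](**call["input"]), False
            else:  # if: the model hallucinated a tool name
                output, is_error = f"Unknown tool: {call['name']}", True
            results.append({"type": "tool_result", "tool_use_id": call["id"],
                            "content": output, "is_error": is_error})
        # All results from this turn go back in a single user message.
        messages.append({"role": "user", "content": results})
    raise RuntimeError("Agent did not finish within max_steps")


# Example with a scripted fake model: one tool call, then a final answer.
def get_weather(location: str) -> str:  # hypothetical stub
    return f"Sunny in {location}"


script = iter([
    {"content": [{"type": "tool_use", "id": "t1", "name": "get_weather",
                  "input": {"location": "Paris"}}]},
    {"content": [{"type": "text", "text": "It is sunny in Paris."}]},
])
history = [{"role": "user", "content": "Weather in Paris?"}]
answer = run_agent(history, lambda msgs: next(script), {"get_weather": get_weather})
print(answer)  # It is sunny in Paris.
```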